How I Finally Got AI to Write Like Real Authors (and Why It Took 40 Dimensions to Get There)

Every few months I convince myself that something seemingly straightforward will take maybe a weekend to solve. This time, I told myself I could teach an LLM to write like specific authors in about three days.

Three weeks later, I had my answer. Yes, it's absolutely possible for an AI to capture not just the feel of an author but their actual structural DNA. The catch? I had to completely rebuild how we think about writing style itself.

The solution wasn't what I expected. It wasn't a fancy multi-stage pipeline or a clever prompt-engineering trick. The breakthrough came from something more fundamental: creating a precise 40-dimensional map of how each author actually constructs prose at a mechanical level.

Let me walk you through what I learned, because the path from failure to success taught me more about writing than the previous decade of just doing it.

Why Everything You Think About Style Is Wrong

When most people talk about writing style, they're really talking about surface texture. They mention short sentences, strong verbs, fewer adverbs. They say things like "make it gritty" or "make it more literary." These descriptors feel intuitive because they're how we experience style as readers, but they completely miss what's actually happening under the hood.

Think about it this way: if you listen to two pianists play the same piece of music, you can hear the difference immediately. One might linger on certain notes, the other might attack each key more sharply. But if you tried to recreate those performances by just saying "play it more emotionally" or "use more dynamics," you'd get nowhere. You'd need to measure actual, specific behaviors like pedal timing, key velocity, rubato patterns, and articulation choices.

Authors work the same way. They have fingerprints, invisible but absolutely mechanical, that span dozens of structural behaviors. Consider how an author handles time itself. Do they move chronologically through events, or do they fracture timelines and layer memories over present action? How literal are their metaphors? When they describe a knife, is it just a knife, or does it carry symbolic weight? How much access do they give you to a character's internal thoughts versus keeping you locked in observable action? Do they see the world as a collection of systems and power structures, or as individual moments of experience? How many sensory channels do they activate? Do you taste, smell, hear, and feel the world, or do you mainly see it?

These aren't subjective preferences or artistic moods. They're measurable, consistent choices that repeat across everything an author writes. And here's the critical part: if you measure them incorrectly or vaguely, you don't get style. You get caricature.

Which is exactly what happened in my early attempts.

When the Model Gets It Spectacularly Wrong

The first version of my system tried to be clever. I fed in passages from dozens of famous authors and asked the model to extract their stylistic signatures automatically. The results were so bad they were almost funny.

The system confidently declared that Hemingway used highly nonlinear time structures at 95% intensity. Anyone who's read "The Old Man and the Sea" knows this is absurd. Hemingway writes in almost pure chronology, one moment following another with minimal temporal disruption.

It claimed William Gibson, the man who practically invented cyberpunk's sensory overload aesthetic, had low sensory density. Gibson's prose is drenched in texture, temperature, color, and proprioception. His sentences make you feel chrome and sweat and neon hum.

It tagged Agatha Christie as having high metaphor usage. Christie's prose is notably literal and clean, almost clinical in its precision. That's part of her genius in mystery writing: no linguistic tricks to hide behind.

At first I thought the model was simply failing at analysis. But as I dug deeper, I realized the problem was more fundamental. The model wasn't bad at reading. It was confused about what the measurements actually meant. Every axis in my style system was too vague, too open to interpretation. When I said "time linearity," what did that actually mean numerically? When I said "metaphor density," how was the model supposed to distinguish between 40% and 60%?

The model was trying its best with definitions that were essentially useless. I was the problem, not the AI.

Rebuilding Style From First Principles

I went back to the drawing board and did something that felt tedious but turned out to be essential: I defined every single stylistic axis with rigorous, specific criteria across eleven distinct levels from 0 to 100.

Take time linearity as an example. I needed to specify exactly what each point on that scale meant. So I defined zero as pure chronological progression where events unfold in simple sequence with no temporal disruption whatsoever. On the opposite end, I defined 100 as fragmented temporal recursion where multiple timelines interweave, memories interrupt present action constantly, and the narrative deliberately disorients the reader about when things are happening. Then I filled in the middle gradations with equal precision.

For metaphor density, zero became completely literal language where objects and actions are described only by their physical properties and direct functions. At 100, nearly every image carries symbolic weight, and metaphorical thinking saturates the prose every few lines. The middle ranges got careful definitions too: at 50%, metaphors appear regularly but don't dominate; they enhance literal description rather than replacing it.
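Below is a minimal Python sketch of what an eleven-level rubric could look like in code. The names (`Axis`, `quantize`, `describe`) and the data layout are illustrative assumptions, not the system described here. Only the 0, 50, and 100 anchors come from the definitions above; the intermediate gradations aren't spelled out in this post, so the sketch falls back to the closest written anchor.

```python
from dataclasses import dataclass

# Eleven rubric levels: 0, 10, 20, ..., 100.
LEVELS = tuple(range(0, 101, 10))


def quantize(score: float) -> int:
    """Snap a raw 0-100 score to the nearest rubric level (ties go to the lower level)."""
    if not 0 <= score <= 100:
        raise ValueError(f"score must be within 0-100, got {score}")
    return min(LEVELS, key=lambda level: abs(level - score))


@dataclass
class Axis:
    name: str
    anchors: dict  # rubric level -> written definition of that level

    def describe(self, score: float) -> str:
        level = quantize(score)
        # Use the closest written anchor; a complete rubric would define all eleven levels.
        anchor = min(self.anchors, key=lambda a: abs(a - level))
        return f"{self.name} = {level}: {self.anchors[anchor]}"


TIME_LINEARITY = Axis("time linearity", {
    0: "pure chronological progression; events unfold in simple sequence with no temporal disruption",
    100: "fragmented temporal recursion; timelines interweave, memories constantly interrupt "
         "present action, and the reader is deliberately disoriented about when things happen",
})

METAPHOR_DENSITY = Axis("metaphor density", {
    0: "completely literal; objects and actions described only by physical properties and direct functions",
    50: "metaphors appear regularly but don't dominate; they enhance literal description rather than replace it",
    100: "nearly every image carries symbolic weight; metaphorical thinking saturates the prose every few lines",
})

print(METAPHOR_DENSITY.describe(48))
```

The point of snapping to fixed levels is that the model (or a human reviewer) never has to interpret a bare number like 48; it always receives the written definition attached to that level.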

I did this for narrator presence (from completely invisible to dominant and opinionating on everything), for sensory channel activation (from purely visual to fully synesthetic), for sentence complexity, for emotional directness, for technical surface, for how power dynamics get framed, for pacing patterns, for everything.

This process took longer than building the entire original system. But once I had real definitions, something shifted. The model suddenly had clarity. It knew exactly what "70% sensory emphasis" meant, not as a feeling or vibe, but as a set of enforceable rules about how many senses to engage per paragraph and with what intensity.
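To make "a set of enforceable rules" concrete, here is a hypothetical sketch of turning a sensory-emphasis level into an explicit instruction plus a crude check. The level-to-senses mapping (`1 + level * 4 // 100`), the intensity thresholds, and the tiny keyword lexicon are all invented for illustration; they are not the rules the system actually uses.

```python
import re

# Hypothetical mini-lexicon; a real check would need far richer cues than keywords.
SENSE_CUES = {
    "sight": {"saw", "glow", "glowed", "bright", "dark", "neon", "red"},
    "sound": {"hum", "heard", "roar", "whisper", "click", "hiss"},
    "smell": {"smell", "smelled", "stink", "scent", "smoke"},
    "taste": {"taste", "tasted", "bitter", "sweet", "salt"},
    "touch": {"cold", "warm", "rough", "sweat", "felt"},
}


def senses_required(level: int) -> int:
    """Map a 0-100 sensory-emphasis level to a minimum number of senses per paragraph (1 to 5)."""
    if not 0 <= level <= 100:
        raise ValueError(f"level must be within 0-100, got {level}")
    return 1 + level * 4 // 100


def sensory_rule(level: int) -> str:
    """Turn the level into an explicit instruction instead of a vibe."""
    if level < 40:
        intensity = "mention sensory details briefly"
    elif level < 70:
        intensity = "give each sense one specific, concrete detail"
    else:
        intensity = "layer several sense impressions within single sentences"
    return f"Engage at least {senses_required(level)} senses per paragraph; {intensity}."


def senses_used(paragraph: str) -> set:
    """Return the senses whose cue words appear in the paragraph."""
    words = set(re.findall(r"[a-z']+", paragraph.lower()))
    return {sense for sense, cues in SENSE_CUES.items() if words & cues}


def meets_rule(paragraph: str, level: int) -> bool:
    return len(senses_used(paragraph)) >= senses_required(level)


print(sensory_rule(70))
```

A keyword check like this only flags obvious misses; it can't judge intensity, which is why the instruction text itself carries most of the weight.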

That turned out to be the entire breakthrough, though I didn't realize it at the time.

The Forensic Reading Phase

With clear definitions established, I faced an even more painstaking task: manually recalibrating every author's profile. This wasn't about sitting back and asking "what feels right?" or relying on critical consensus about each writer's style. This was forensic work. I read passages line by line, tagging actual behaviors and counting patterns.
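Some of that counting can be roughed out mechanically. The sketch below is a hypothetical helper, not part of the system described here: it computes two surface proxies, mean sentence length and simile markers per sentence. Both are crude (the naive splitter trips on abbreviations, and "like" also matches the verb), so they can only support line-by-line reading, never replace it.

```python
import re
from statistics import mean

# Crude proxy for similes: "like" also matches the verb, so counts need a human check.
SIMILE_MARKERS = re.compile(r"\b(?:like|as if|as though)\b", re.IGNORECASE)


def split_sentences(text: str) -> list:
    """Naive splitter: break after ., ! or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def count_patterns(text: str) -> dict:
    sentences = split_sentences(text)
    if not sentences:
        raise ValueError("need at least one sentence")
    lengths = [len(re.findall(r"[A-Za-z']+", s)) for s in sentences]
    similes = sum(len(SIMILE_MARKERS.findall(s)) for s in sentences)
    return {
        "sentences": len(sentences),
        "mean_words_per_sentence": mean(lengths),
        "simile_markers_per_sentence": similes / len(sentences),
    }


print(count_patterns("Four drones came in. They were black. She watched them."))
```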

When I analyzed Hemingway properly, the real profile emerged: almost zero metaphor usage, almost no access to character interiority, completely linear time, heavy emphasis on concrete sensory details (especially physical sensations), clipped and structurally simple sentences, and zero narrator commentary. The prose stays stubbornly on surfaces, describing exactly what happens and what can be physically observed.

Gibson's actual fingerprint looked completely different: dense sensory stacking where multiple sense impressions layer in single sentences, high systemic awareness showing how technology and power structures shape experience, metaphors drawn specifically from machinery and urban infrastructure, longer sentences that maintain tight semantic control, strong body-awareness and proprioception, and technical detail deployed without explanatory hand-holding.

Asimov showed yet another distinct pattern: linear logical progression, minimal sensory content, slightly visible narrator who occasionally frames information, moderate technical detail that gets explained when necessary, and sparse metaphor that appears mainly for conceptual clarity rather than aesthetic effect.

Each author, when examined carefully, revealed a unique 40-dimensional fingerprint. And here's what happened once those fingerprints became accurate: the model no longer needed help. It didn't require a second pass to refine output or a separate editing stage to enforce style. One pass became sufficient because the instructions were finally precise enough to follow.

The style enforcement became strong, stable, and eerily consistent.

The Stress Test That Proved It Worked

I needed to know if this was real or if I was just seeing patterns I wanted to see. So I designed a stress test using the scene that eventually became the opening of "The Midnight Lounge": drones entering a cyberpunk bar to conduct some kind of mysterious surveillance operation.

Same story prompt. Same events. Same characters. Same basic scenario. I only swapped the author style map.
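The shape of that experiment can be sketched as a loop that holds the scene constant and swaps only the style section. This is a hypothetical illustration: `build_prompt` and the section labels are assumptions, the Hemingway values come from the specification given later in this post, and the other style maps are placeholders because their actual values aren't published here.

```python
# Fixed content: only the style map varies between runs.
SCENE = "Four drones enter a cyberpunk bar to conduct a mysterious surveillance operation."

STYLE_MAPS = {
    # Axis values taken from the Hemingway specification quoted later in this post.
    "hemingway": "5% metaphor density, 10% interiority access, 80% sensory concreteness, "
                 "20% sentence complexity, 0% narrator presence",
    # Hypothetical placeholders: the real Gibson, noir, and Christie maps aren't shown here.
    "gibson": "<Gibson style map>",
    "noir": "<noir style map>",
    "christie": "<Christie style map>",
}


def build_prompt(scene: str, style_map: str) -> str:
    """Keep style and content in separate sections so swapping one never touches the other."""
    return (
        f"STYLE MAP (follow exactly):\n{style_map}\n\n"
        f"SCENE (do not change events or characters):\n{scene}\n"
    )


prompts = {name: build_prompt(SCENE, style) for name, style in STYLE_MAPS.items()}
print(prompts["hemingway"])
```

Keeping the scene in its own section, byte-for-byte identical across runs, is what makes any difference between outputs attributable to the style map alone.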

The differences were unmistakable. The Hemingway version came out clipped, literal, quiet, and almost emotionally flat. "Four drones came in. They were black. They counted numbers. She watched them." Simple declarations. Surface observations. No interpretation.

The Gibson version emerged dense with sensory information, technical without explanation, rhythmic, saturated with textures and ambient details. You could feel the synthetic hum of rotors, taste the recycled air, sense the electromagnetic presence of surveillance equipment.

The noir version shifted into voice-heavy metaphors and street-level texture, with the narrator's personality bleeding into every description. The drones weren't just drones; they were "mechanical vultures" or "chrome angels of the surveillance state."

The Christie version became procedural and observant, cataloging details with clinical precision. Who entered when. What they said exactly. What actions followed in sequence. Clean, clear, and methodical.

I could read any of these versions blind, without labels, and tell you which author wrote them. That was the moment everything clicked. We weren't imitating authors anymore. We were modeling their narrative machinery at a functional level.

What This Actually Enables

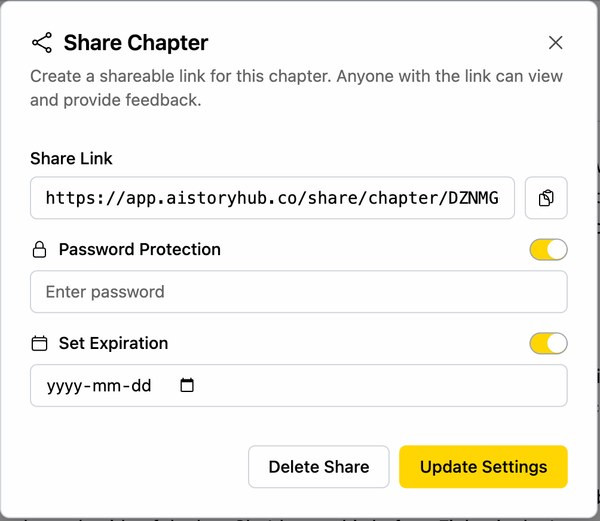

Once you have genuinely accurate style maps, the model can do things that were previously impossible. It can write in a specific voice consistently across long passages without drift or collapse into generic AI-smooth prose. It can maintain that voice through different scenes, different emotional tones, different levels of action or introspection. Most importantly, it keeps the plot and content independent from the style, which means you can tell any kind of story in any author's voice without the voice dictating what happens.

Each author becomes a recognizable narrative physiology, a distinct way of processing and presenting experience that remains stable regardless of what that experience contains.

But the real power goes beyond imitation. Because every axis is now controllable with precision, you can do something more interesting: tune your own style deliberately. You can create hybrid voices by blending parameters from different authors. You can evolve your voice over time by adjusting specific dimensions while keeping others constant. You can experiment with stylistic parameters you might never consciously think about.
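If a style map is just a set of named 0-100 values, blending and single-axis adjustment reduce to simple arithmetic. The sketch below assumes exactly that representation and uses per-axis linear interpolation for blending; both the representation and the blending rule are illustrative choices, not the system's documented behavior. `PROFILE_B` is a made-up placeholder, not a measured author.

```python
# Illustrative values: the Hemingway numbers come from the specification later in this post;
# PROFILE_B is a made-up placeholder, not a measured author profile.
HEMINGWAY = {"metaphor_density": 5, "interiority_access": 10, "sensory_concreteness": 80,
             "sentence_complexity": 20, "narrator_presence": 0}
PROFILE_B = {"metaphor_density": 65, "interiority_access": 50, "sensory_concreteness": 90,
             "sentence_complexity": 70, "narrator_presence": 40}


def blend(a: dict, b: dict, weight_b: float) -> dict:
    """Linear interpolation per axis: weight_b=0 returns a, weight_b=1 returns b."""
    if a.keys() != b.keys():
        raise ValueError("profiles must cover the same axes")
    if not 0 <= weight_b <= 1:
        raise ValueError("weight_b must be within 0-1")
    return {axis: round((1 - weight_b) * a[axis] + weight_b * b[axis]) for axis in a}


def adjust(profile: dict, axis: str, value: int) -> dict:
    """Return a copy with one axis changed and every other axis held constant."""
    if axis not in profile:
        raise KeyError(axis)
    if not 0 <= value <= 100:
        raise ValueError("value must be within 0-100")
    return {**profile, axis: value}


hybrid = blend(HEMINGWAY, PROFILE_B, 0.5)
evolved = adjust(HEMINGWAY, "metaphor_density", 30)
print(hybrid)
print(evolved)
```

Linear interpolation is the simplest possible blend; whether a 50/50 average of two voices actually reads like a coherent hybrid is something only the generated prose can tell you.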

Consider something like "systemic awareness," which measures how much the prose acknowledges power structures, institutional forces, and systemic causation versus treating events as products of individual choice and action. Most writers never explicitly decide where they fall on this spectrum. But once you can measure and adjust it, you can see how it shapes everything else about your narrative voice.

Or take "metaphor literalness," which captures whether your metaphors function as decorative comparisons or whether they create actual semantic meaning. You might discover your natural tendency is toward 30% on this scale, but your story would work better at 60%. Now you can make that adjustment consciously.

It's the difference between saying "write like Hemingway" and saying "write with Hemingway's specific narrative machinery: 5% metaphor density, 10% interiority access, 0% time linearity, 80% sensory concreteness, 20% sentence complexity, and 0% narrator presence." (On the time scale I defined earlier, 0 means pure chronological progression, which is exactly where Hemingway sits.)
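Producing an instruction of that shape from a profile is mechanical. Here is a minimal sketch, assuming a profile is a dict of axis names to 0-100 values; `render_spec` is a hypothetical helper, and only five of the forty axes are included for brevity.

```python
# Five of the axes from the Hemingway specification; the remaining axes are omitted for brevity.
HEMINGWAY = {
    "metaphor_density": 5,
    "interiority_access": 10,
    "sensory_concreteness": 80,
    "sentence_complexity": 20,
    "narrator_presence": 0,
}


def render_spec(author: str, profile: dict) -> str:
    """Render a profile as an explicit, numeric style instruction."""
    for axis, value in profile.items():
        if not 0 <= value <= 100:
            raise ValueError(f"{axis} must be within 0-100, got {value}")
    parts = [f"{value}% {axis.replace('_', ' ')}" for axis, value in profile.items()]
    return f"Write with {author}'s specific narrative machinery: " + ", ".join(parts) + "."


print(render_spec("Hemingway", HEMINGWAY))
```

In practice each number would travel with its rubric definition, since a bare percentage is exactly the kind of vague target the earlier failures came from.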

Why One Pass Was Enough

The biggest misconception I had going into this project was that I'd need elaborate multi-pass pipelines. First pass for content, second pass for style enforcement, maybe a third pass for consistency. That's how a lot of advanced AI writing systems work.

Turns out we didn't need any of that. We just needed accurate, quantized, enforceable style definitions. We needed a complete map of an author's narrative behavior with sufficient precision that ambiguity disappeared.

Once we had that clarity, the model didn't need extra help or corrective passes. It just needed to know exactly what the target was. Give it clear enough instructions and it hits the mark in a single generation.

By my own rough estimate, the system now produces writing in any author's voice with about 90% structural fidelity, regardless of genre, theme, or story content. It's not mimicry, where you reproduce famous phrases or obvious stylistic tics. It's not vibes, where you approximate a feeling without mechanical accuracy. It's not aesthetic cosplay, where you dress up generic prose in superficial markers.

It's actual narrative identity, captured at the level of structural choices. Codified into measurable dimensions. Controllable through parameter adjustment. Transferable across any content.

This is what I've been chasing since I started experimenting with AI writing tools. Not a system that writes for me, but a system that lets me understand and control the machinery of style itself with the same precision an audio engineer controls frequency bands in a mix.

And after three weeks of complete immersion, countless failed iterations, and one very long night of manual author profiling, it finally works.